Cyber threat intelligence (CTI) teams are frequently asked to provide metrics for leadership to validate their contributions

This blog was cowritten by John Doyle, Gert-Jan Bruggink, Steven Savoldelli, and Callie Guenther.

Cyber threat intelligence (CTI) teams are frequently asked to provide metrics for leadership to illustrate their contributions in helping improve the organization’s security posture and reducing risks. However, most CTI programs, especially those starting down this path, tend to create weak metrics centered solely around production throughput. These are often viewed as “low hanging fruit” and are frequently misaligned with the intent behind the request for metrics. While measuring throughput may serve as an initial step, CTI teams should ultimately aim to demonstrate meaningful insights that go beyond routine measurements. Metrics that genuinely reflect program impact and maturation require careful planning.

In this article, we examine why organizations struggle to conceptualize and develop effective metrics for CTI programs before presenting a practical guide for metrics categorization that CTI teams can leverage to develop meaningful program measures. Throughout this blog, we showcase examples of how CTI metrics align to actionable intelligence, risk reduction, and business impact using our categorization taxonomy. We conclude with parting thoughts, highlighting previous research produced specifically around CTI metrics.

Before delving into the blog’s substance, we’d first like to thank Callie Guenther, Braxton Scholz, Chandler McClellan, Rebecca Ford, Katie Nickels, Brandon Tirado, Greg Moore, Jonathan Lilly, and others who helped start us down this path at the SANS CTI Summit 2024 workshop we hosted on how to build an effective CTI Program.

Metrics are one modality that a cybersecurity function can – and often does – use to measure effectiveness and efficiency. Yet measuring the value cybersecurity provides for an organization is a daunting and cumbersome task, and its benefits are often unclear to the managers assigned to it. Perhaps this is because of lack of exposure, training, desire, aptitude, perceived value, or any number of other reasons. Beyond these sits the elephant in the room: it is challenging to show the value of improved decision-making, cost savings from mitigated incidents, and agility in responding to a dynamic threat landscape, especially in a quantifiable way.

To properly create metrics that showcase the value of CTI services, collaborative systems thinking is crucial. This approach should account for factors such as brand reputation, consumer trust, legal consequences, employee productivity, and morale, going beyond the traditional cybersecurity triad of confidentiality, integrity, and availability, which falls short when used alone. This process becomes even more complex when developing meaningful metrics for programs like CTI, whose role is to inform and enhance decision-making among defenders, risk managers, and cybersecurity leadership. Throughout this process, we should strive to work with partner teams – stakeholders – to understand the impact of our work in driving security outcomes for the organization. A unified story – often conveyed through metrics – can effectively demonstrate how the CTI function strengthens organizational resilience, reduces cyber risk, protects against regulatory fines, and safeguards brand reputation, thereby justifying the cost of staffing and maintaining a CTI program.

Before cybersecurity and threat intelligence leadership rush to decree that all programs need metrics, a more effective starting point would be to determine what the program wants to measure, why, and what outcomes it will drive by capturing these measurements. Metrics should serve as a means to an end. Organizations should first establish the purpose of the metrics they intend to capture and clarify how they plan to use this information to drive business decisions. For example, for leadership and management it may be that we measure and demonstrate value to those who have a say in our funding and existence – namely highlighting our raison d'être. For our peers, it may be that we measure their expected outcomes and how CTI has aided in helping them achieve these.

The process of developing metrics carries an administrative cost that often exceeds that of merely collecting available data. For example, collecting relevant metric-supporting data may require building new technology, processes, and workflows to gather, store, and display metrics that serve a specific, exploratory purpose. Capturing metrics solely for their own sake is a misstep that can lead to wasted resources. Instead, data collection should support business outcomes and use cases. For example, analyzing the intelligence requirements the team serviced over a fixed period of time can help leadership decide whether out-of-band stakeholder re-engagement is needed, either to confirm that the team should keep producing intelligence products on a given topic or to free the team to shift its focus to other pressing needs.
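The requirements review described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed workflow: the product log, the requirement IDs (`IR-01` and so on), and the function names are all hypothetical, and a real program would pull this data from its own tracking system.

```python
from collections import Counter
from datetime import date

# Hypothetical product log: (publication date, intelligence requirement ID).
# The IDs and dates are invented for illustration.
PRODUCTS = [
    (date(2024, 1, 15), "IR-01"),
    (date(2024, 2, 3), "IR-01"),
    (date(2024, 2, 20), "IR-02"),
    (date(2024, 3, 9), "IR-01"),
]
ALL_REQUIREMENTS = ["IR-01", "IR-02", "IR-03"]


def requirements_serviced(products, requirements, start, end):
    """Count products per requirement published within [start, end]."""
    counts = Counter(
        req for published, req in products if start <= published <= end
    )
    # Include requirements with zero products so gaps stay visible.
    return {req: counts.get(req, 0) for req in requirements}


def unserviced(serviced):
    """Requirements with no products in the period: re-engagement candidates."""
    return [req for req, count in serviced.items() if count == 0]


q1 = requirements_serviced(
    PRODUCTS, ALL_REQUIREMENTS, date(2024, 1, 1), date(2024, 3, 31)
)
print(q1)
print(unserviced(q1))
```

A requirement with no products in the period is not automatically stale; it is a prompt to ask the stakeholder whether the need still exists.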

It is also worth noting that metrics are not the only method to showcase program success; qualitative accomplishments that highlight value across the organization should also be celebrated.

CTI teams, like many stakeholder-driven functions, face challenges in creating universally transferable metrics, which can vary by organization, scale, and stakeholder involvement. Below, we offer a taxonomy for constructing and evaluating meaningful metrics within CTI programs. This taxonomy serves as a foundation to guide teams in building metrics from the ground up, allowing the programs to elect one or more of these framings for use.

![Building and Evaluating Metrics: A Taxonomy for CTI Metrics](https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blte69bbfb446f58a97/678a905558fb6dd5d180f56e/Building_and_Evaluating_Metrics_A_Taxonomy_for_CTI_Metrics.jpeg)

CTI programs should be thinking about metrics development to drive action, but it is often difficult for those not trained in metrics design or the mission outcomes it enables through the CTI and cybersecurity lens to conceive starting points. We propose that organizations think of these in three broad categories: Administrative, Performative, and Operational. Some overlap may exist between performative and operational metrics, especially in evaluating investments in security controls, tooling, and external datasets. Metrics in each category serve unique purposes, from cost planning to gauging resource utilization.

Arguably, there could be enough overlap between administrative and performative metrics to merge them into one category. The value in collecting a variety of different types of metrics is that it helps planning efforts as a cost center given the reality of finite resourcing. As noted, the method of reporting metrics should reflect the unique operational environment of an organization, so we encourage considering these other methods of examining metrics.

When designing metrics, consider the intended audience and the outcomes these metrics aim to support. A given metric may serve various purposes, from helping CTI managers justify resource needs to highlighting areas of excellence or identifying routine concerns. Most often, the primary audience for metrics is senior leadership, but depending on industry or region, metrics may also be driven in part by regulatory compliance, headcount scrutiny, or audit.

To ensure relevance and impact, the metrics chosen for each audience should be directly aligned with key business outcomes. While tailored to different audiences, these metrics ultimately contribute to a unified understanding of how performance impacts business success.

These measures may then be used as fodder for a CTI manager to develop a business justification for additional headcount, highlight an area of excellence, or identify routine areas of concern to consumers. In cases where metrics are tied directly to intelligence requirements, capturing the stakeholders, desired impact, and realized impact in support of stakeholder outcomes is prudent. Common implementations of this metric type are often limited to consumer feedback along the criteria of timeliness, completeness, and actionability, where actionability covers both immediate action and input to strategic planning.
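As a rough sketch of how consumer feedback along these three criteria might be summarized, the Python below averages scores per stakeholder group. The 1-to-5 scale, the stakeholder names, and the record layout are assumptions made for illustration; the source does not prescribe a scoring scheme.

```python
from statistics import mean

CRITERIA = ("timeliness", "completeness", "actionability")

# Hypothetical feedback records, scored 1 (poor) to 5 (excellent).
FEEDBACK = [
    {"stakeholder": "SOC", "timeliness": 4, "completeness": 5, "actionability": 3},
    {"stakeholder": "SOC", "timeliness": 2, "completeness": 4, "actionability": 3},
    {"stakeholder": "Risk", "timeliness": 5, "completeness": 3, "actionability": 4},
]


def average_scores(feedback):
    """Mean score per criterion for each stakeholder group."""
    grouped = {}
    for record in feedback:
        grouped.setdefault(record["stakeholder"], []).append(record)
    return {
        stakeholder: {c: mean(r[c] for r in records) for c in CRITERIA}
        for stakeholder, records in grouped.items()
    }


scores = average_scores(FEEDBACK)
for stakeholder, by_criterion in scores.items():
    print(stakeholder, by_criterion)
```

Averages like these say little on their own; pairing them with the desired and realized impact for each requirement gives leadership the context needed to interpret them.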

Examples

Effective CTI programs leverage regular cadence syncs and consumer group integration to raise stakeholder awareness, grow the brand, expand organizational reach, and ensure cross-functional cooperation. Understanding the workflows of stakeholder teams is vital to demonstrating CTI’s impact. Metrics can measure the frequency and timing of CTI interactions, correlate team utilization via requests for information (RFIs), and measure feedback loops, collaborations, and brand advocacy across the organization.

Early-stage CTI teams may operate with minimal integration, placing a heavy burden on team members to educate consumers about CTI’s role. As integration improves, the goal is to move towards seamless alignment. Teams looking to build metrics based on organizational reach can benefit from lessons learned by industry peers with similar team size, composition, or constraints.

CTI leadership can demonstrate incremental improvement in trust with natural stakeholders, especially where progress was stymied. This can be achieved through breaking down organizational silos, overcoming difficult personalities, and creating opportunities for joint work production, training, or cross-team exposure. Additionally, cybersecurity senior management can explicitly recognize improved intra-team collaboration.

Examples:

Metrics vary in complexity based on access to data and the need to engage partner teams. Low-complexity metrics are those that operate within the control of the CTI team, such as tracking the number of phishing emails reported by employees. High-complexity metrics, by contrast, rely on cross-team data, processes, and collaboration, which requires accounting for additional administrative overhead.

High-complexity tasks – and their subsequent metrics capture – may involve significant collaboration, implicit assumptions, and inadvertent bias, leading to potential cascading errors. This underscores the need for the CTI program lead to remain vigilant during the metric creation and capture process. Balancing effort across different metric types is essential to ensure that the CTI team is not overburdened by overly complex metrics, while still capturing valuable insights that require cross-team collaboration. This balance allows for efficient resource allocation and maximizes the impact of the CTI program.

Examples:

Metrics may provide value either as point-in-time snapshots or as longitudinal data over extended periods. While extended time frames help identify trends and outliers, it becomes challenging to attribute outcomes to specific causes. Therefore, it’s critical to document factors that influence metric interpretation.
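The difference between the two time frames can be illustrated with a small Python sketch over tagged records. The record layout, tag names, and dates are hypothetical; the same idea applies whether the data lives in a spreadsheet or a central intelligence system.

```python
from collections import Counter
from datetime import date

# Hypothetical tagged intrusion records; fields and values are invented.
INTRUSIONS = [
    {"detected": date(2024, 1, 10), "tags": {"phishing"}},
    {"detected": date(2024, 1, 25), "tags": {"phishing", "credential-theft"}},
    {"detected": date(2024, 2, 14), "tags": {"ransomware"}},
    {"detected": date(2024, 3, 2), "tags": {"phishing"}},
]


def snapshot(records, tag, as_of):
    """Point-in-time: records carrying `tag` detected on or before `as_of`."""
    return sum(1 for r in records if tag in r["tags"] and r["detected"] <= as_of)


def monthly_trend(records, tag):
    """Longitudinal: records carrying `tag`, counted per (year, month)."""
    counts = Counter(
        (r["detected"].year, r["detected"].month)
        for r in records
        if tag in r["tags"]
    )
    return dict(sorted(counts.items()))


print(snapshot(INTRUSIONS, "phishing", date(2024, 2, 29)))
print(monthly_trend(INTRUSIONS, "phishing"))
```

Note that the trend view omits months with no matching records, which is exactly the kind of interpretive detail worth documenting alongside the metric.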

Note: structured data stored in a logical manner with proper tagging can be easily queried in spreadsheets or more powerful central intelligence systems like The Vertex Project’s Synapse to quickly create recurring reports and queries to view longitudinal trends. We provide the following two illustrations as examples of ways to store intrusion-related data structured in a way that is easy to query.

![cti-metrics-002](https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blta0f6b1cce5023e18/677d9544baaf225e33288285/cti-metrics-002.png)

![cti-metrics-003](https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt569b3a539776aaa6/677d954434b6b61c39bb4321/cti-metrics-003.png)

Examples:

CTI programs that are starting to build metrics should be intentional about their creation, outlining clearly the purpose behind each. Ultimately, metrics are a means to an end. Period. Hard stop. As programs mature, they can develop metrics that are more strategic and complex, designed to unearth trends. However, experimentation is usually required in order to right-size these aspirational metrics.

There is a deeper discussion needed, however, about what to capture and when, as the CTI program’s demand, capacity, and capability grow. Only once the specific threats, vulnerabilities, priority intelligence requirements (PIRs), or stakeholder demands are clarified and documented do you have a tangible CTI program to start with. Any metrics developed before this baseline level of maturity will fall victim to high noise, shifting contexts, and exceedingly fluid business processes.

As Rob Lee and Rebekah Brown emphasize in the SANS FOR578 course, the core metric for a CTI team is whether it meets stakeholder needs and demonstrates business impact. CTI teams should aim to provide straightforward answers to common program questions and establish a tangible program baseline, capturing specific threats, vulnerabilities, and stakeholder needs. As a customer service function, this is imperative to justify the CTI program’s existence.

The initial metrics a program creates should focus on data that is easily obtainable, minimally complex, and easy to interpret. As CTI teams develop familiarity with data collection, they can evolve through the taxonomy, capturing more nuanced and sophisticated metrics. Continuous improvement, supported by structured, repeatable data, is crucial for metrics-driven maturity.

Example:

Every metric created should be evaluated for what it might imply to an uninformed consumer, so that CTI leadership can give staff adequate onboarding on the pitfalls and errors analysts may encounter during routine delivery. The easiest way to do this is to consider implications and inferences around causality, assumptions, and gaps. This becomes critical when conveying metrics over time, as people may erroneously fill in knowledge gaps with conclusions that are unsupported by evidence.

Examples:

Metrics not only convey performance but also serve as tools to secure buy-in from critical stakeholders. Effective metrics enable CTI programs to demonstrate responsible investment in security resources, communicate the rationale behind metric selection, and reveal insights that support data-driven decision-making. By engaging stakeholders in this process, CTI teams increase the likelihood of program support and build trust in the data used to inform cybersecurity strategies.

Growing a CTI program involves more than tracking metrics; it requires actionable insights that drive program alignment with organizational goals. This alignment covers practical elements such as processes, deliverables, and integrations that allow for consistent measurement.

Example:

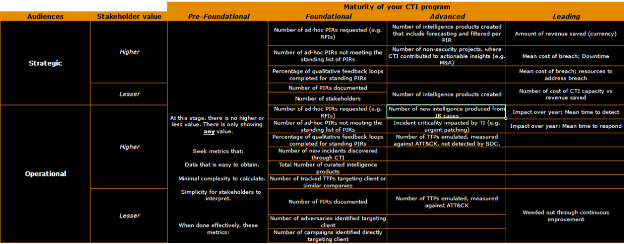

To close, the table below presents examples that have proven effective in supporting teams while also contributing to the organization’s internal maturity journey. It serves as a practical reference, illustrating various CTI metrics by role, audience, complexity, and timeframe.

Table 1: Sample CTI Metrics Table

| Metric Type | Example | Role | Audience | Complexity | Time Frame |
| --- | --- | --- | --- | --- | --- |
| Report Utility | Share of reports using licensed data sources | Administrative | Senior Management | Low | Point-in-Time |
| Resource Allocation | CTI support to red team engagements | Performative | Red Team | Medium | Period of Time |
| Threat Reduction Impact | Measured decrease in identified risks | Operational | Risk Management | High | Period of Time |
| Consumer Feedback Rate | Frequency of RFI submissions | Integration | Consumer Teams | Low | Period of Time |
| Cost-Benefit Analysis | Estimated savings from CTI-led mitigations | Operational | Finance | High | Point-in-Time |
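For teams that want to keep such a catalog in a queryable form, the table can be captured as structured records. The sketch below is one possible representation, assuming a simple Python dataclass; the `Metric` class and `select` helper are hypothetical names introduced here, and the rows are copied from Table 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    metric_type: str
    example: str
    role: str
    audience: str
    complexity: str
    time_frame: str


# Rows copied from Table 1.
SAMPLE_METRICS = [
    Metric("Report Utility", "Share of reports using licensed data sources",
           "Administrative", "Senior Management", "Low", "Point-in-Time"),
    Metric("Resource Allocation", "CTI support to red team engagements",
           "Performative", "Red Team", "Medium", "Period of Time"),
    Metric("Threat Reduction Impact", "Measured decrease in identified risks",
           "Operational", "Risk Management", "High", "Period of Time"),
    Metric("Consumer Feedback Rate", "Frequency of RFI submissions",
           "Integration", "Consumer Teams", "Low", "Period of Time"),
    Metric("Cost-Benefit Analysis", "Estimated savings from CTI-led mitigations",
           "Operational", "Finance", "High", "Point-in-Time"),
]


def select(metrics, **criteria):
    """Return metrics whose attributes equal every given keyword value."""
    return [
        m for m in metrics
        if all(getattr(m, field) == value for field, value in criteria.items())
    ]


# A newer program might start with the low-complexity entries.
low_complexity = select(SAMPLE_METRICS, complexity="Low")
print([m.metric_type for m in low_complexity])
```

Filtering by `audience` or `time_frame` works the same way, which makes it straightforward to assemble a tailored view for each consumer of the metrics.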

CTI teams will continue to be asked to provide metrics to demonstrate their value to leadership, regardless of whether the request aligns with its intended purpose. Any CTI professional should approach such requests with as little ambiguity as possible, seeking clarification on the desired outcomes and leadership’s appetite to allocate additional resources should the intended goals exceed existing capabilities or capacity.

We would be remiss if we did not highlight existing CTI metrics resources:

We stewed on this quite a bit and hopefully the insights provided in this blog offer a solid starting point to categorically approach metrics generation. Feel free to follow us on social media for more content on this and other CTI topics:

Special thanks to Freddy Murre and Nicole Hoffman for their thoughtful peer review, questions posed, and substantive suggestions that improved the blog’s quality. And many thanks also to Koen Van Impe who created a most excellent graphic to quickly help summarize the main categories presented within this article.

John has over sixteen years of experience working in Cyber Threat Intelligence, Digital Forensics, Cyber Policy, and Security Awareness and Education.
